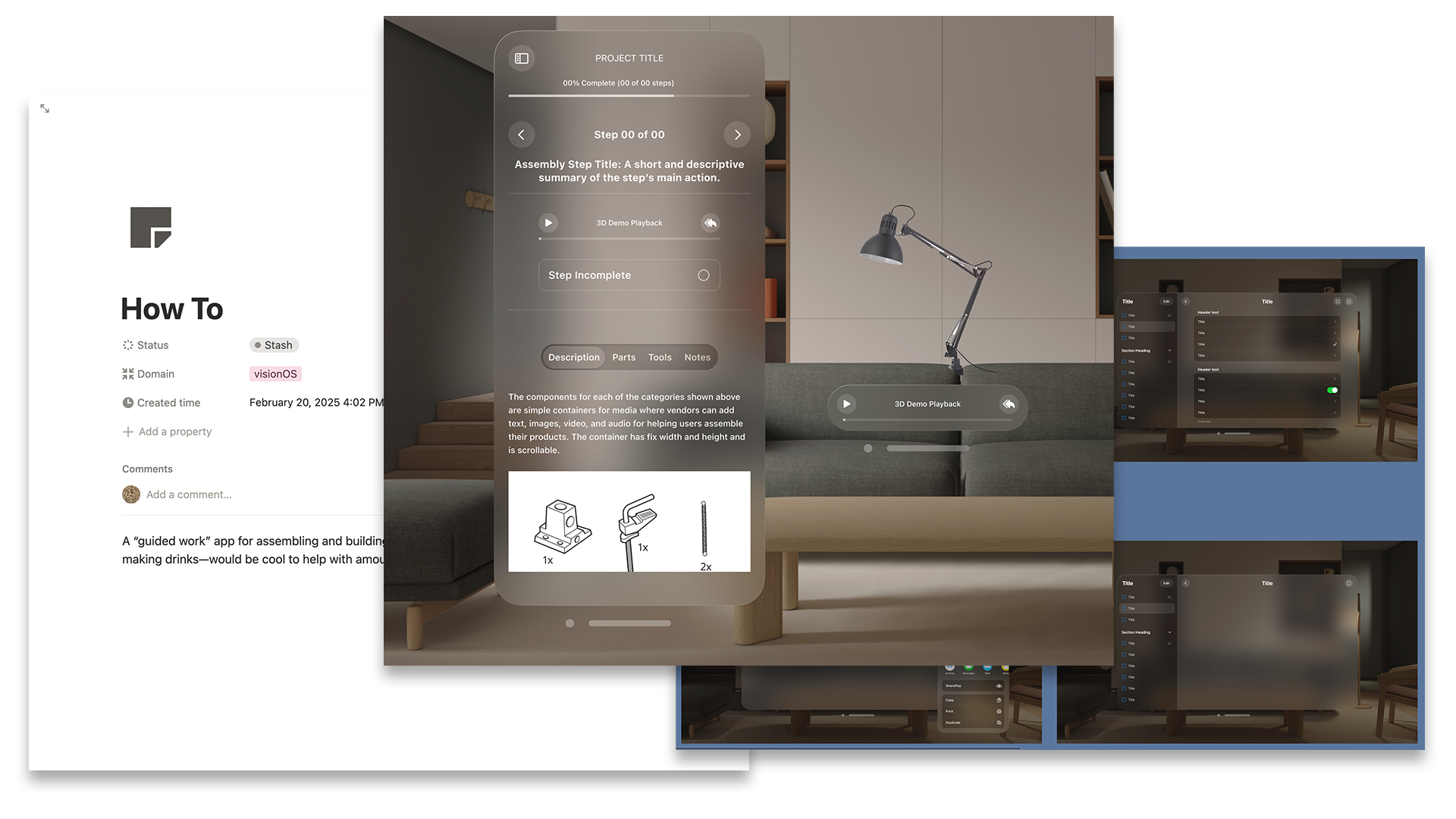

The Core Features

Assembling furniture, appliances, structures, and other DIY projects from paper instructions, or even from demo videos, is often a frustrating and error-prone process. Many of these problems could be solved with a good way to simulate the actions each step requires, letting you see at life-size scale how all of the parts fit together.

Another way to put it: you are far less likely to end up in a "the door I screwed onto that new Ikea storage cabinet is backwards and upside-down" situation after you've assembled one or two of the same cabinet, or even after just watching someone else complete the assembly.

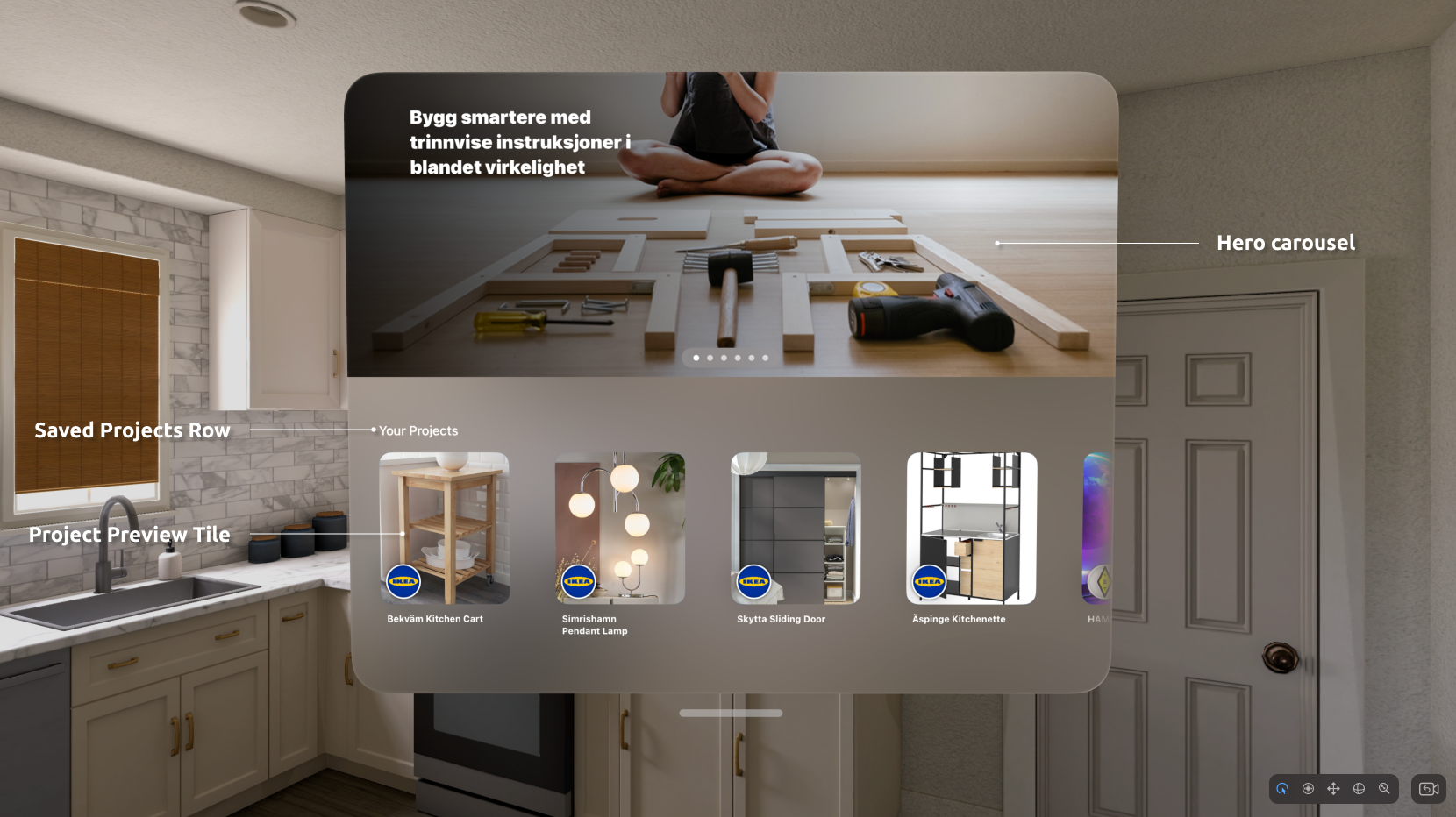

This app uses the mixed reality capabilities of the Apple Vision Pro to give people true-to-scale simulations of each step of their DIY project. These simulations help them understand how the parts are meant to fit together before they act, so they can avoid costly mistakes and damaged materials.

From something as simple as a birdhouse to sophisticated high-tech machinery, the animated digital replicas and the reference materials the app places in your surroundings help you get things right the first time.

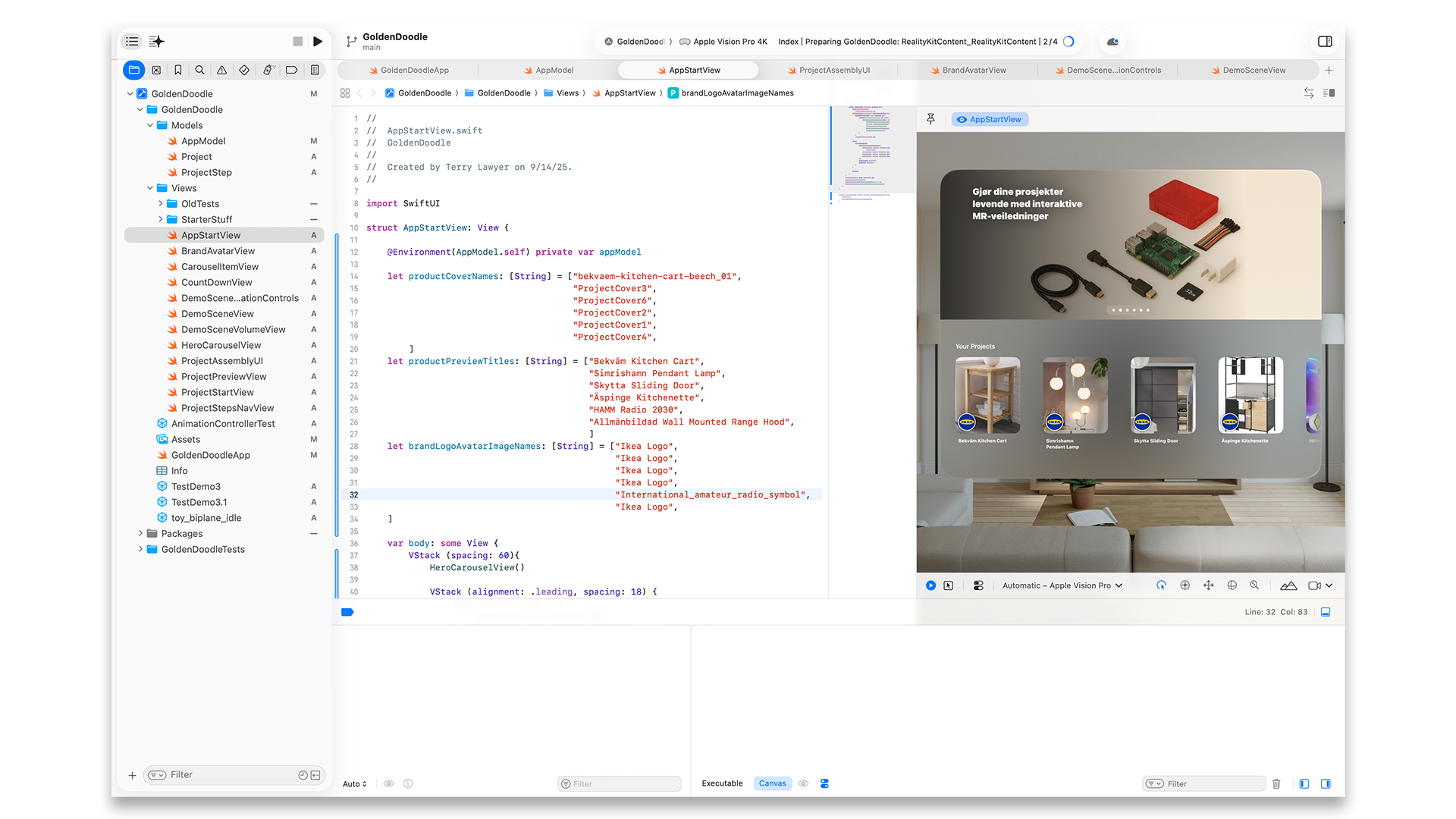

Like most spatial computing projects, the design-to-prototype process for this concept involved producing many parts, both small and large. On top of that, I set out to simulate how the content creation and ingestion pipeline might work for this product. In practice, that pipeline would require many companies, large and small, to produce a significant number of 3D assets, all of which would need to comply with the app's specifications for display and playback.

The main takeaways I got from the 3D pipeline were:

The last stop in the process, user testing, is also more difficult than in a typical 2D app design process. Spatial computing headsets like the Vision Pro mean you will probably need to hold in-person sessions with your participants and hope the headset fits them comfortably. The biggest obstacle for me in this project is the need to purchase multiple Bekvam kitchen carts from Ikea for realistic testing, so user testing will begin once I find a much smaller and cheaper product for testers to assemble.